Persisting temporary accessible data via Power BI Datamarts with the example of Power BI Activity Logs

This post is also available in:

![]() German

German

Last week I had an interesting discussion with my friend and co-organizer of the Power BI User Group Switzerland, Kristian Bubalo. The question was whether it is possible to persist temporarily available data using only Power BI on-board tools and surprisingly, we discovered an extremely viable solution. As a more specific example, we had the discussion about data that is called via REST API and is only available for a certain period of time. For this article, I will use the data of the Power BI Activity Log as an example, which only provides the rolling values of the last 30 days for download.

Materialization of data requires query folding for incremental refresh

To materialize such data within the Power BI toolbox, the only option is to persist the data using incremental refresh. The crucial point, however, is that the incremental refresh only works with relational sources, since QueryFoling must be supported. Unfortunately, this is not the case with a REST API.

However, this is exactly what is now possible with Power BI Datamarts released in May 2022!

A short note in advance, I provide my entire solution for download at the end of the article.

Architecture

The setup is very easy to perform. We need only two elements:

- A Power BI Datamart to load daily data via REST API

- A Power BI Dataflow, which persists the values with incremental refresh

The data is loaded from the REST API into the Power BI Datamart. You can then apply an incremental refresh to the Azure SQL database of the datamart, since the Azure SQL database of the datamart supports QueryFolding and can therefore be loaded incrementally.

In this way, the Datamart serves as a staging area, while the Dataflow is used to persist the data. The REST API can ideally be loaded on a daily basis. The new data is then persisted by incremental refresh. The procedure is planned as follows:

Implementation

This article is mainly about persisting data that cannot be stored directly via incremental refresh and not about the connection to the REST API. This is why I will only roughly describe the steps to connect the Power BI Activity Log or I will link to the corresponding blogposts.

The implementation consists of 2 steps:

- Loading the data via REST API into the Power BI Datamart

- Loading the values from the datamart into the dataflow

Loading the Power BI Activity Log via Power BI Datamart

The loading of the activity logs could theoretically also be done with a personal account with admin rights. However, I would strongly recommend doing this with a service principal account. The first step is to create an Azure app that has read-only access. The following article explains how you can do this:

How to use a service principal in Power BI Admin REST APIs in Power Automate – Forward Forever

Loading data from the REST API can now be developed as usual in Power Query within Power BI Desktop. The only limitation in the datamart, as well as the dataflows, is that recursive functions are not possible at the current time. Recursive functions are functions that invoke themselves. The API for the activity log returns a continuation token on the first call. The API then has to be called as often as no more token is returned. For this reason, a recursive function would be the most clean solution. This would work in Power BI Desktop, but not in the cloud. If anyone from Microsoft is reading this, please enable recursive Power Query functions!

My solution approach simply calls the API 75 times and ignores the further calls as soon as no token is returned.

Integration of the REST API load into the Datamart

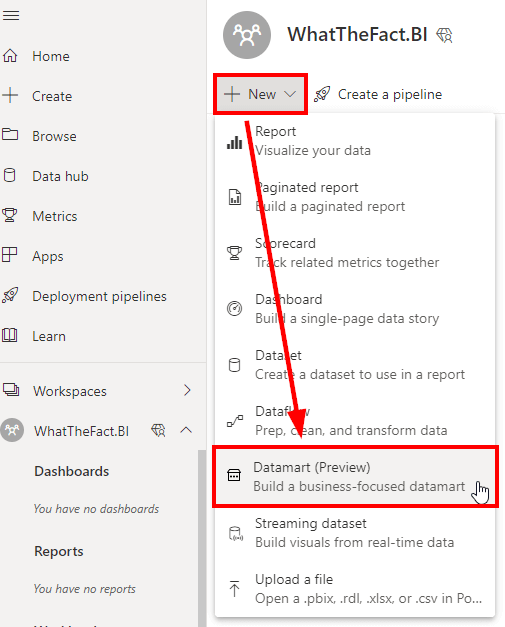

First we create a new datamart. We can create this by clicking on “New” and then “Datamart”:

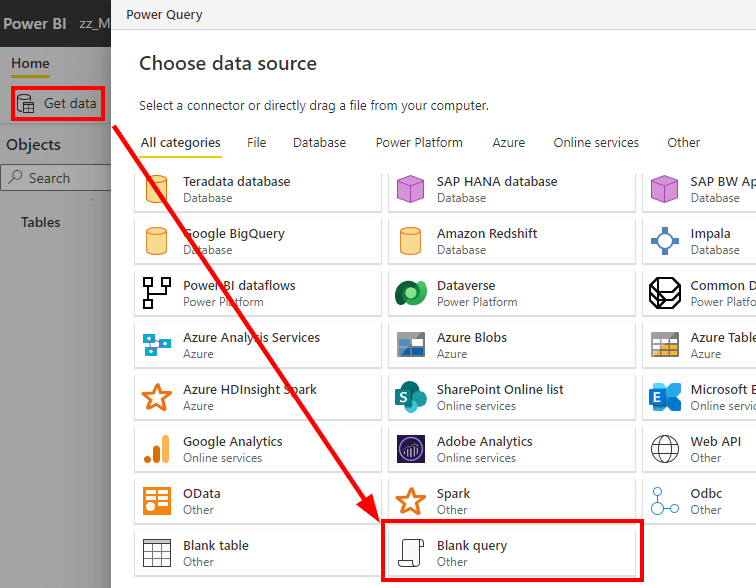

If the API connection is not yet developed, this can be done here. However, I personally find developing in PowerQuery within Power BI Desktop more convenient. In this scenario, I am assuming that we have already created the API integration in Power Query. This way, we can simply select an empty query as data source and then insert the queries from our PowerQuery within Power BI Desktop using Ctrl + C and Ctrl + V here:

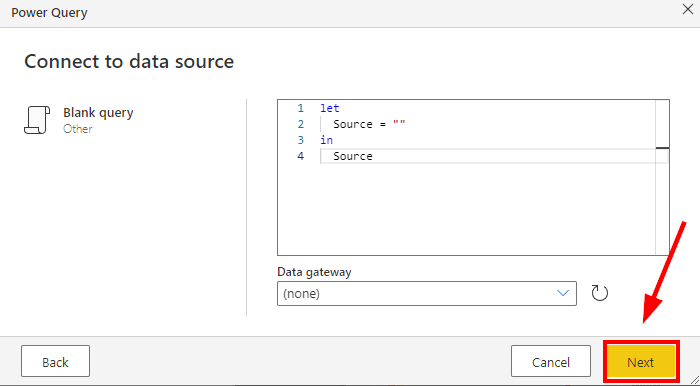

In the next step, we can just leave the query empty and click “Next”:

After that, the query can simply be copied from the Power BI file to the datamart. As mentioned above, the sample file can be downloaded at the end of the article:

After clicking on “Next” we are basically done with the datamart. We still need to rename the datamart, enable the automatic refresh and we should be ready to persist the data. When creating the Power BI datamart, there always will 2 elements created. First a datamart, which is based on Azure SQL, as well as a dataset, which contains the data model. In our case, we are only interested in the datamart, as we are not creating any reports based on the dataset. In the datamart we also need to enable automatic refresh:

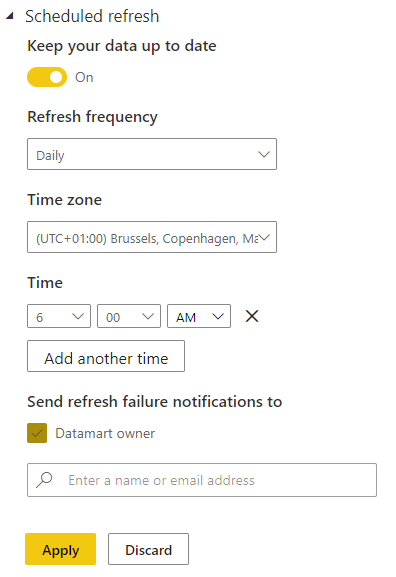

For example, we set the daily refresh to 6 am:

Persistence of data using the example of Power BI Dataflows

After the first manual refresh of the data, the first days are now loaded into the Power BI Datamart. Now we need to trigger the persistence of our data. We will create a Power BI Dataflow here, which will persist the data via incremental refresh. Currently, an incremental refresh with Power BI Dataflows requires a Premium or Premium per User license. However, there are also alternatives without a premium or premium per user workspace. I will talk about these in the chapter “Alternative solutions”.

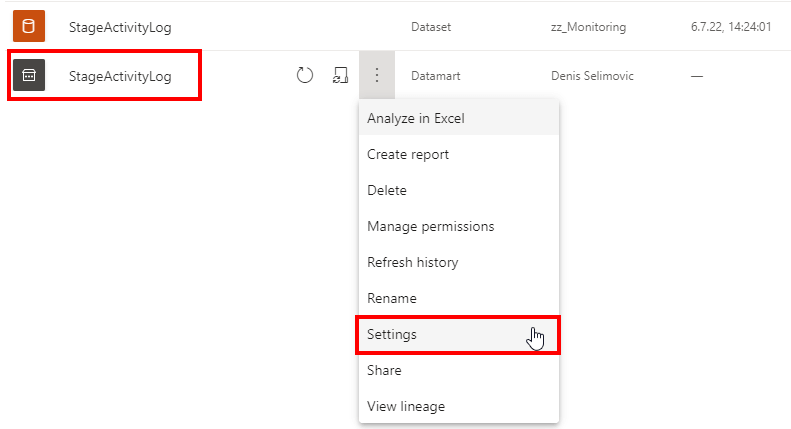

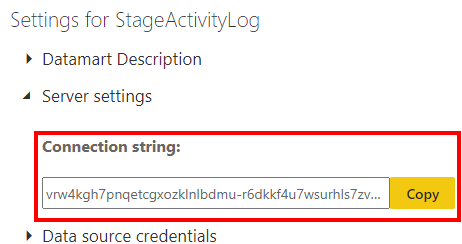

First we need the connection string to the datamart’s Azure SQL database. We can get this from the options of the datamart:

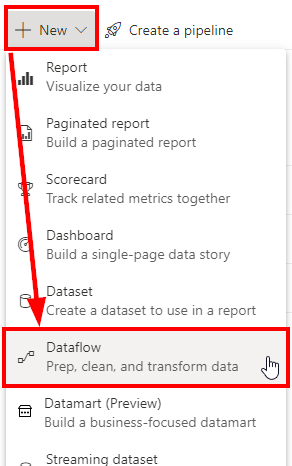

Now we create a new Power BI Dataflow:

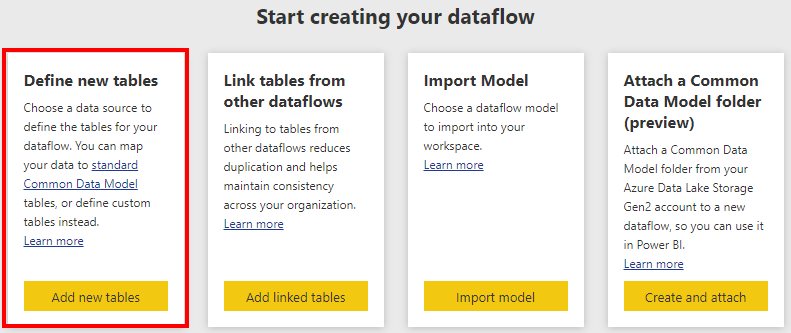

Then select “Add new tables”:

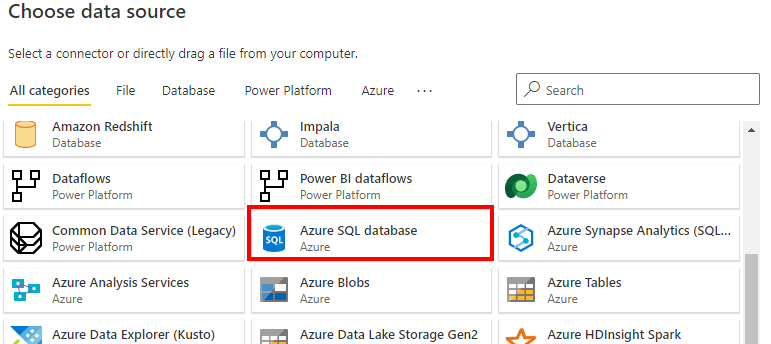

In the following dialog we select the Azure SQL database:

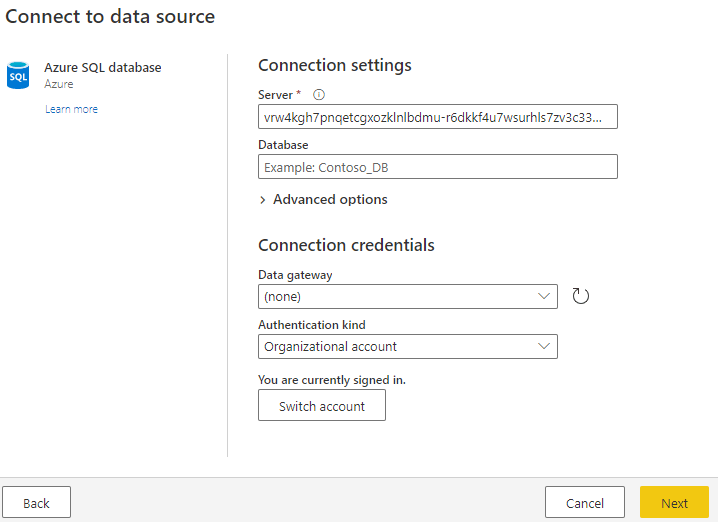

As server we paste the connection string that we copied from our datamart. We can leave the database field empty and as authentication we choose “Organizational account”. We might have to log in and with a click on “next” we are connected to our Datamart database:

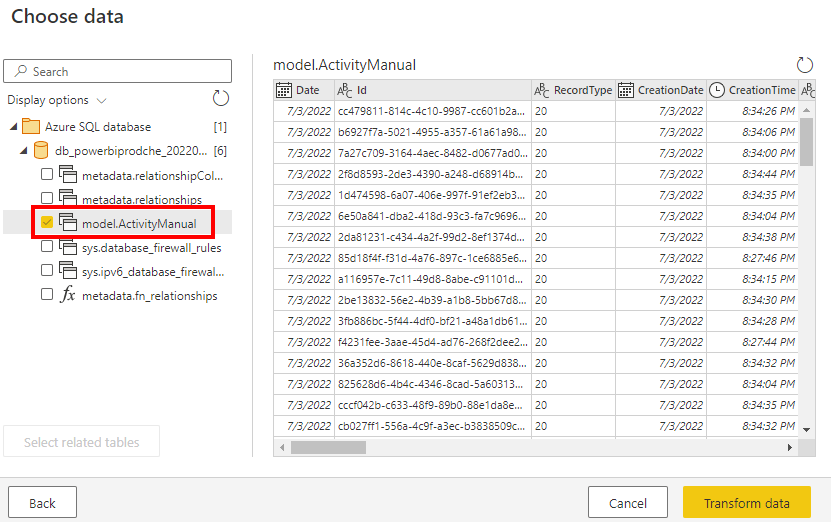

On the left side we select our “ActivityManual” table, which we created in the Datamart. All our tables created there appear in the schema “model”. After that we click on “Transform data”:

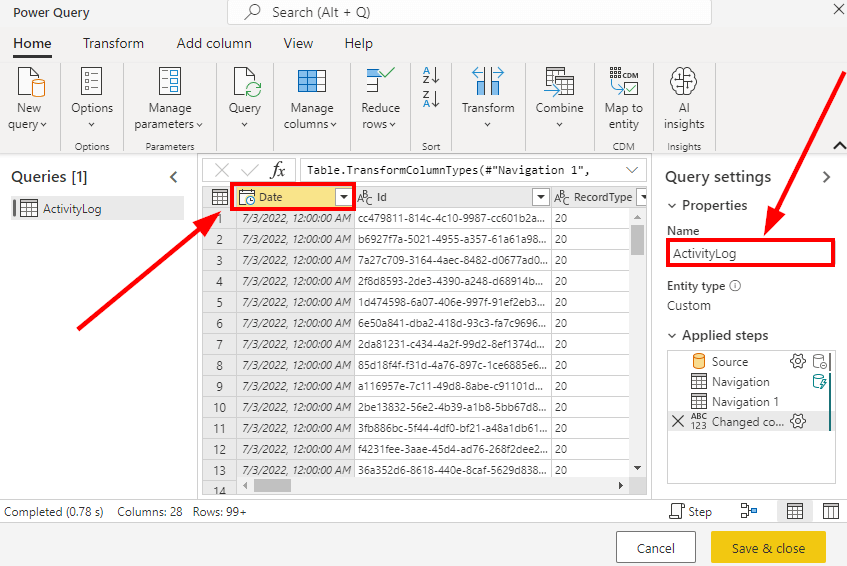

After that PowerQuery opens in the browser. In general we don’t have to make any changes, only we have to make sure that our date column is of type DateTime. We can also rename the table if we don’t like the original name:

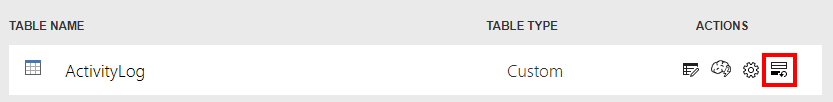

After confirmation with “Save & close”, the dataflow has also been set up. Now we have to set up the incremental refresh. To do this, we click on the incremental refresh icon:

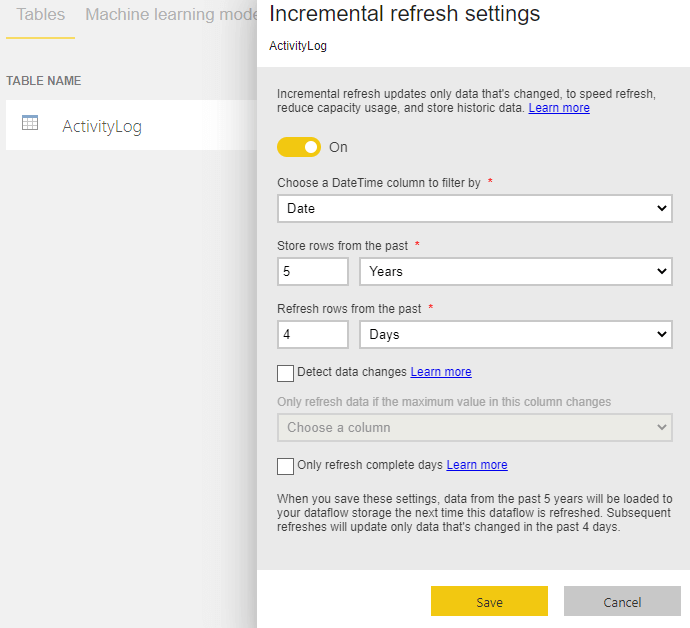

Then we have to select the column that contains our date. Also we need to define over what period of time the data should be stored in the table. In my example I have chosen 5 years and which period should be deleted and reloaded daily. In my example, I chose to delete and reload the last 4 days on a daily basis:

In general, I would always update a few days rolling, as the Activity Log values can still change for a few days backward from when they were initially loaded.

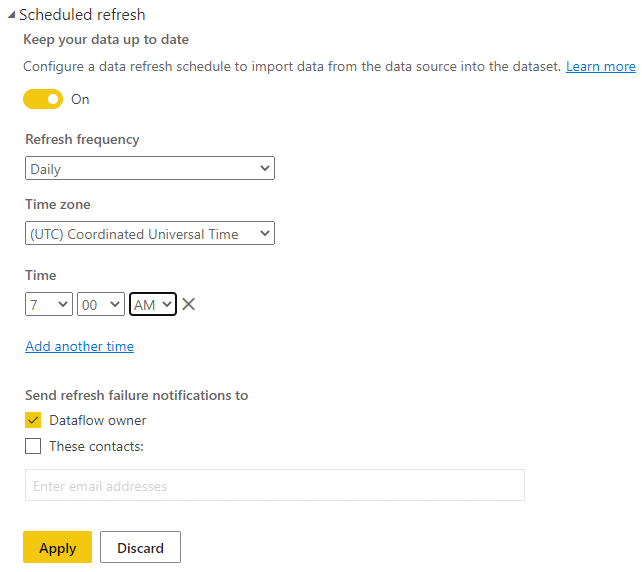

After creating the dataflow with incremental refresh, it still needs to be refreshed. I would trigger this directly manually. After that, you still need to create a daily automatic refresh, chronologically this should happen after the datamart refresh is finished:

Thus, every morning the Power BI Datamart automatically loads the logs of the last days. The latest data is then automatically loaded into the Power BI Dataflow via incremental refresh and in this way materialized.

Limitations and alternative solutions

This article demonstrated the ability to persist a time-limited data source such as the Power BI Activity Log into Dataflow via Datamart and incremental refresh.

For this case, the restriction was to use only Power BI technologies. However, there are still a few points to consider

Archiving in Cold Storage

Although persistence via dataflow is possible, there is still to consider that the data is archived within the dataflow, but also only exists there. This means that with the deletion of the dataflow, the data would also be lost. Backup of Dataflow data is not possible without further steps.

One possibility here would be to use the “bring your own datalake” approach and separate the data from the dataflow.

It would also be possible to archive the data stored in the Datamart, for example with a PowerAutomate Flow that could persist the data in a BLOB storage or in Sharepoint. In such a case, the file-based archive can be accessed at any time and the history can be restored in the future.

Alternatives without Premium or Premium per User

The path described above requires a Premium or Premium per User license for the datamarts and also for the incremental refresh in Power BI Dataflows. If this is not available, a different approach must be taken. You could theoretically also load the data to a Power BI Dataflow and persist the values directly into a Power BI dataset. Here we have again the problem that a complete archiving is not given, since with a reprocessing of the dataset all data would be deleted. The historical data can then no longer be reloaded.

So again, my suggestion would be to persist the data from Power BI Datamart elsewhere, either via PowerAutomate or Azure DataFactory, for example in an Azure SQL, BLOB storage or simply in Sharepoint.

Download solution: